Yibo Zhang | 张轶博

I am currently a Ph.D. student in the Intelligent Content Learning (ICL) Group at Jilin University, supervised by Professor Rui Ma, and I am also part of a joint training program at the Shanghai Innovation Institute (SII). I received my Bachelor's degree in computer science from Jilin University in 2023. During my undergraduate studies, I interned at Zhejiang Lab, Huawei, and ByteDance. I am also a member of the PaperABC team on the Bilibili video platform, where I share cutting-edge research in 3D AIGC. Follow us for more!

News

Research

My research interests lie in computer graphics and computer vision, with a particular focus on 3D content generation and world models. Some of my papers are highlighted.

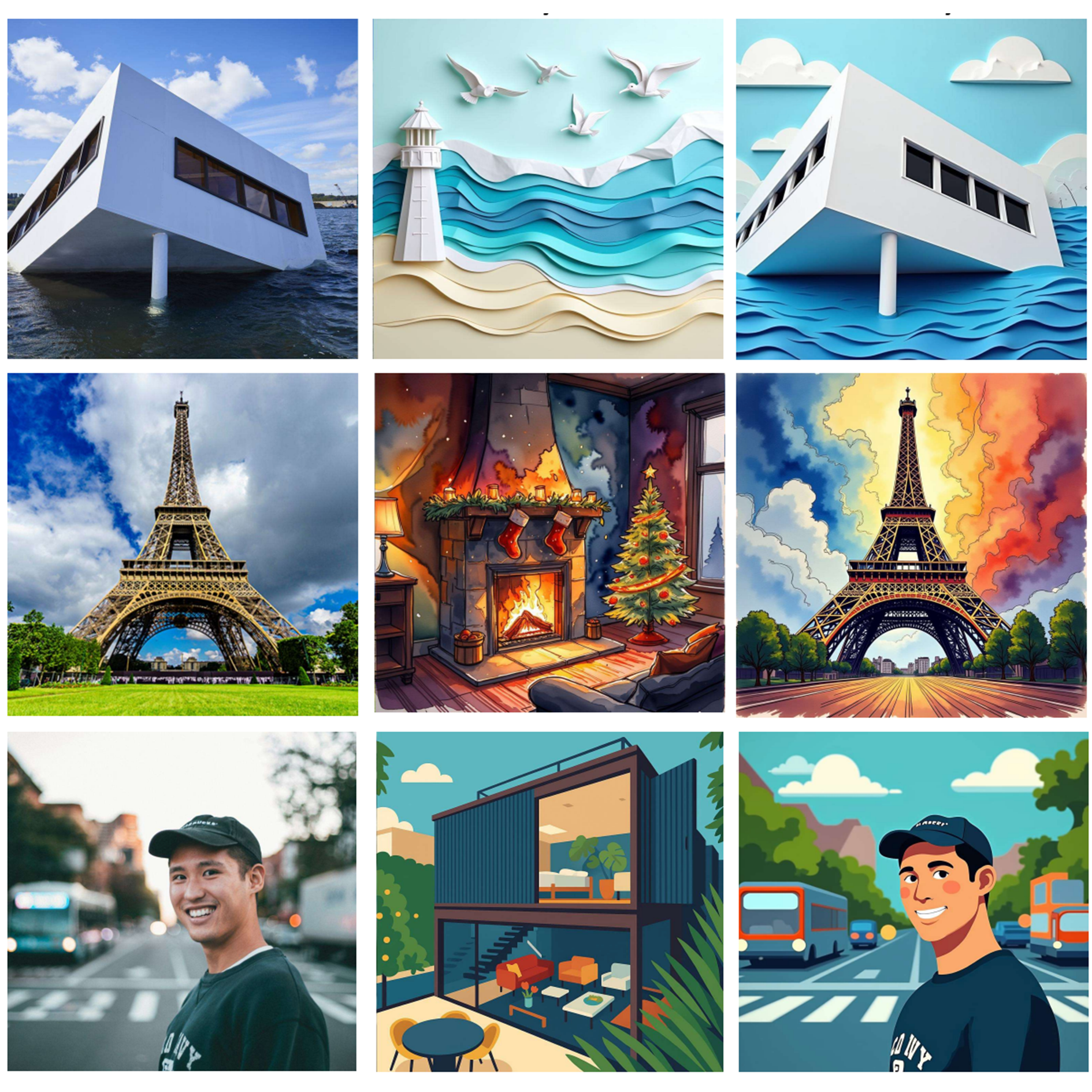

Ye Wang, Zili Yi, Yibo Zhang, Peng Zheng, Xuping Xie, Jiang Lin, Yilin Wang, Rui Ma. arXiv preprint. Project / Paper

We propose OmniStyle2, which reframes artistic style transfer through destylization: a process that removes stylistic elements from artworks to recover clean, style-free content. Using this approach to construct the large-scale DST-100K dataset, we enable a simple feed-forward model that consistently surpasses state-of-the-art methods.

Yibo Zhang, Li Zhang, Rui Ma, Nan Cao. arXiv preprint. Project / Paper

We propose TexVerse, a large-scale 3D dataset featuring high-resolution textures. TexVerse contains over 858K unique high-resolution 3D models sourced from Sketchfab, more than 158K of which include physically based rendering (PBR) materials.

Miaowei Wang, Yibo Zhang, Rui Ma, Weiwei Xu, Changqing Zou, Daniel Morris. CVPR, 2025. Project / Paper

We propose DecoupledGaussian, which separates static objects from the surfaces they are in contact with in in-the-wild videos, enabling realistic interactive simulations between objects and scenes.

Yibo Zhang, Lihong Wang, Changqing Zou, Tieru Wu, Rui Ma. ICLR, 2025. Project / Paper

We propose Diff3DS, a novel differentiable rendering framework for generating view-consistent 3D sketches. Diff3DS enables end-to-end optimization of 3D sketches via gradients in the 2D image domain, supporting new tasks such as text-to-3D sketch and image-to-3D sketch generation.

Songchun Zhang, Yibo Zhang, Quan Zheng, Rui Ma, Wei Hua, Hujun Bao, Weiwei Xu, Changqing Zou. CVPR, 2024. Paper

We propose 3D-SceneDreamer, which provides a unified solution for text-driven, 3D-consistent generation of both indoor and outdoor scenes.

Industrial Experience

Website design from Jon Barron.